Baidu Login and Baidu Index Extraction with Selenium

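Requirement

Read the daily values of a keyword's trend curve from the Baidu Index trend page, for example: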

http://index.baidu.com/?tpl=trend&word=itools

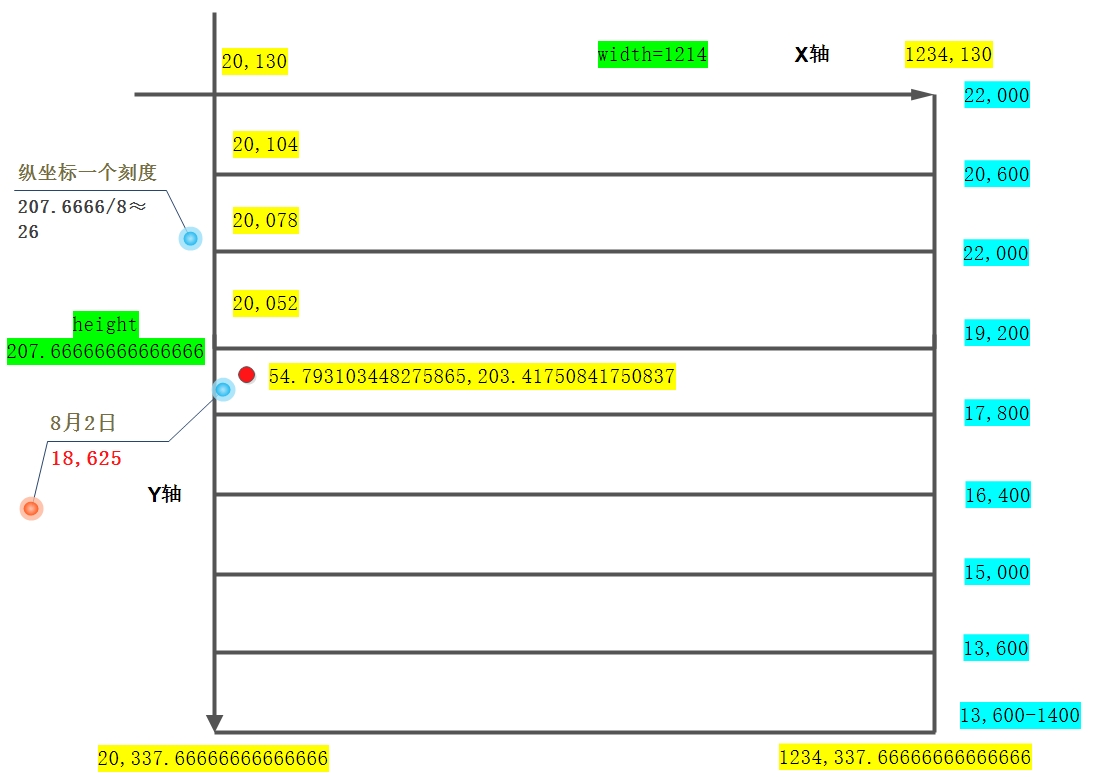
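The `word` query parameter carries the keyword. The crawler's `webcrawler` method builds this URL by percent-encoding the keyword's GB2312 bytes. Below is a minimal Python 3 sketch of that step. The helper name `build_trend_url` is hypothetical.

```python
from urllib.parse import quote

BASE_URL = 'http://index.baidu.com/?tpl=trend&word='


def build_trend_url(keyword):
    """Build the trend-page URL with the keyword percent-encoded as GB2312 bytes."""
    return BASE_URL + quote(keyword, encoding='gb2312')


print(build_trend_url('itools'))  # http://index.baidu.com/?tpl=trend&word=itools
print(build_trend_url('爱情'))    # each GB2312 byte becomes a %XX escape
```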

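Cubic Bézier curve analysis

The trend line is drawn as an SVG `<path>` with stroke `#3ec7f5`. Its `d` attribute consists of one `M` (move-to) command followed by cubic Bézier `C` commands: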

```text
M20,209.71043771043767
C20,209.71043771043767,37.403792291215744,202.91564252551038,54.793103448275865,203.41750841750837
C72.18241460533599,203.91937430950637,73.20310901938423,205.95708808566553,89.58620689655173,211.80808080808077
C105.96930477371923,217.65907353049602,107.03607008639847,227.22833278337725,124.37931034482759,228.58922558922558
C141.7225506032567,229.9501183950739,142.51636522414827,213.07945078549736,159.17241379310346,218.10101010101005
C175.82846236205864,223.12256941652274,178.44993667348015,252.18578642065503,193.96551724137933,260.05387205387206
C209.4810978092785,267.92195768708905,211.42970775670906,263.68409090917896,228.75862068965517,262.1515151515151
C246.0875336226013,260.6189393938513,246.2535151946967,251.9137883963013,263.55172413793105,253.76094276094273
C280.8499330811654,255.60809712558415,280.953233267576,270.9573693100153,298.3448275862069,270.54208754208753
C315.7364219048378,270.1268057741598,318.03951864381804,260.3049404318127,333.1379310344828,251.66329966329965
C348.23634342514754,243.02165889478658,352.22734445560417,238.1724636145718,367.93103448275866,230.68686868686865
C383.63472450991316,223.2012737591655,385.3765270266145,216.79701324539502,402.72413793103453,218.10101010101005
C420.0717488354545,219.40500695662507,420.2680561610064,234.72023581086987,437.51724137931035,236.97979797979792
C454.7664265976143,239.239360148726,459.27137746988086,240.10553324989965,472.3103448275862,228.58922558922558
C485.3493121852916,217.0729179285515,489.7547176782527,135.00391473049126,507.1034482758621,136.29292929292924
C524.4521788734716,137.58194385536723,529.3926083978823,285.7163490857493,541.896551724138,297.8114478114478
C554.4004950503937,309.90654653714626,559.2931034482759,304.1043771043771,576.6896551724138,304.1043771043771
C594.0862068965517,304.1043771043771,594.0934474636296,297.30958191944984,611.4827586206897,297.8114478114478
C628.8720697777499,298.3133137034458,630.6164737870624,298.6241860439508,646.2758620689656,306.20202020202015
C661.9352503508686,313.77985436008953,664.461123342158,328.29261708828153,681.0689655172414,333.4713804713804
C697.6768076923248,337.66666666666663,698.9227646961804,335.33605774965736,715.8620689655173,331.37373737373736
C732.8013732348542,327.41141699781735,733.5081181472079,319.62597101028564,750.6551724137931,316.6902356902357
C767.8022266803783,313.7545003701857,768.1759444027329,316.7126491983071,785.4482758620691,318.78787878787875
C802.7206073214052,320.86310837745043,802.8520681532848,325.582673972806,820.2413793103449,325.080808080808
C837.630690467405,324.57894218881006,837.6451716015606,317.1921015822337,855.0344827586207,316.6902356902357
C872.4237939156809,316.18836979823766,873.1531736773215,318.02292520225126,889.8275862068966,322.98316498316495
C906.5019987364716,327.94340476407865,907.4736353885872,334.73093134661667,924.6206896551724,337.66666666666663
C941.7677439217576,337.66666666666663,942.578941522853,337.66666666666663,959.4137931034484,335.56902356902356
C976.2486446840436,331.1840738372781,977.1386719183727,322.1517634107601,994.2068965517242,318.78787878787875
C1011.2751211850757,315.4239941649974,1029,320.8855218855218,1029,320.8855218855218
```
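Each `C x1,y1,x2,y2,x,y` command draws one cubic Bézier segment. The first two pairs are control points, and only the last pair (x, y) lies on the curve. The `M` command supplies the first point. The data points are therefore the start point plus the end point of every `C` segment. In the path above that gives 30 points, running from x = 20 to x = 1029 in steps of about 34.8 px.

Below is a minimal sketch that extracts those points. The function name is hypothetical. It assumes absolute `M`/`C` commands as above, with no exponent notation, and needs Python 3.7+ to split on a zero-width pattern.

```python
import re


def path_points(d):
    """Return the on-curve points of an SVG path made of absolute M and C commands."""
    points = []
    # Split before every command letter; the lookahead keeps the letter with its numbers
    for segment in re.split(r'(?=[MC])', d.strip()):
        if not segment:
            continue
        numbers = [float(n) for n in re.findall(r'-?\d+(?:\.\d+)?', segment)]
        # M has one (x, y) pair; C has three, and only the last pair lies on the curve
        points.append((numbers[-2], numbers[-1]))
    return points


d = ('M20,209.71043771043767'
     'C20,209.71043771043767,37.403792291215744,202.91564252551038,54.793103448275865,203.41750841750837'
     'C72.18241460533599,203.91937430950637,73.20310901938423,205.95708808566553,89.58620689655173,211.80808080808077')
print(path_points(d))
```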

The `<rect>` element (the plot area):

```html
<rect x="20" y="130" width="1214" height="207.66666666666666" r="0" rx="0" ry="0" fill="#ff0000" stroke="none" opacity="0" style="-webkit-tap-highlight-color: rgba(0, 0, 0, 0); opacity: 0;"></rect>
```
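The transparent `<rect>` spans the plot area. Its top edge is at y = 130 and its bottom edge at y = 130 + 207.67 ≈ 337.67. The on-curve y values in the path fall inside this band, from 136.29 up to 337.67. SVG y grows downward, so a smaller y means a larger index value.

Below is a small sketch that reads the band from the markup with the standard library. The helper name is hypothetical, and it assumes the element is well-formed XML, as the snippet above is.

```python
import xml.etree.ElementTree as ET

RECT = ('<rect x="20" y="130" width="1214" height="207.66666666666666" r="0" rx="0" ry="0" '
        'fill="#ff0000" stroke="none" opacity="0"></rect>')


def plot_band(rect_markup):
    """Return (top, bottom) pixel y coordinates of the plot-area rect."""
    rect = ET.fromstring(rect_markup)
    top = float(rect.get('y'))
    return top, top + float(rect.get('height'))


print(plot_band(RECT))
```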

The scale on the right (the y axis), which is served as an image rather than as text:

```html
<img id="trendYimg" style="position: absolute; left: 1129px; top: 137px;" src="/Interface/IndexShow/getYaxis/?res=LWJGOX8PdwAFFgE0KQAdVw0vF3V%2FM3YeDksXYzRaAAgMIxskLFd1H3ECJT5TRxQSCVcwORcsBXwhJwsqE0ZkJn4DNzxaBhl8QzVBKzZtIXAKMn4gdmt7BRoZCTVPIDsWMz9IMAVbfxkhEAwiOFZ4FAkQUi9CBwEhVnAlBBofDhsuMF4ZeVRlIjBCOkA4YC0MAWI8DC02MlRhEjIUPlhCNAAjEy4SAkYROmIWHQFrFXYqCRxORSggK0onYRsiDRQWJCgCU380LDcU&res2=bPEXSTR7htcHoxnlmzMmLgSCjXbEFWycT6i3M5VHoHwRcxsgjpzZGvy544.271bP&max_y=6bGp&min_y=6bo&axis=Pb5">
```

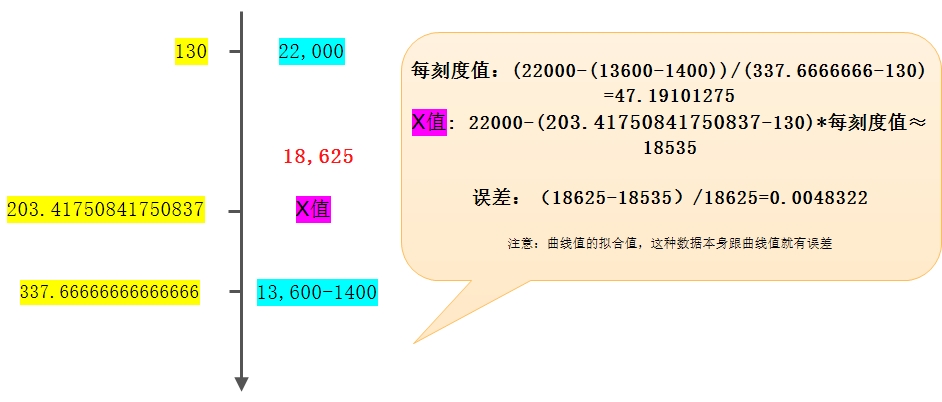
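Because the scale is an image (`trendYimg`), its tick labels cannot be read from the DOM and have to be OCR'd. The crawler takes the first recognized token as the maximum and the last as the minimum, and assumes the labels are evenly spaced.

Below is a sketch of that parsing step. The function name and the sample OCR text are invented for illustration.

```python
def parse_ticks(ocr_text):
    """Turn OCR output such as '1,200 900 600 300' into (max_value, min_value, step)."""
    tokens = ocr_text.split()
    if len(tokens) < 2:
        raise ValueError('need at least two tick labels, got %r' % ocr_text)
    # Labels may carry thousands separators, e.g. "1,200"
    values = [float(token.replace(',', '')) for token in tokens]
    max_value, min_value = values[0], values[-1]  # top label first, bottom label last
    # Labels are assumed evenly spaced, so the step follows from the two end labels
    step = (max_value - min_value) / (len(values) - 1)
    return max_value, min_value, step


print(parse_ticks('1,200\n900\n600\n300'))  # (1200.0, 300.0, 300.0)
```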

Analysis
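Each point's pixel y has to be mapped linearly onto index values. Define the following symbols:

- $y_0 = 130$ and $h = 207.\overline{6}$ are the top and height of the `<rect>`.
- $v_{\max}$ and $v_{\min}$ are the first (top) and last (bottom) labels read from the scale image.
- $n$ is the number of labels.

The crawler's code places $v_{\max}$ at the top edge of the rect. At the bottom edge it places the value one tick step $k$ below $v_{\min}$. This gives:

$$
k = \frac{v_{\max} - v_{\min}}{n - 1}, \qquad
v(y) = v_{\max} - (y - y_0)\,\frac{v_{\max} - (v_{\min} - k)}{h}
$$

As a check, $v(y_0) = v_{\max}$ and $v(y_0 + h) = v_{\min} - k$. The one-step offset at the bottom edge is what the code assumes; the page itself does not state it.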

Calculation
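The value computation at the end of `webcrawler` maps pixel y linearly onto the scale:

- The rect top (y = 130) corresponds to the top label.
- The rect bottom (y = 130 + 207.67) corresponds to one tick step below the bottom label.
- The i-th point becomes the value for the i-th day of the displayed range.

Below is a standalone sketch of the same arithmetic. The function names and the sample scale (labels from 1,200 down to 300 in four steps) are hypothetical.

```python
import datetime

PLOT_TOP = 130.0                  # rect y
PLOT_HEIGHT = 207.66666666666666  # rect height


def pixel_to_value(y, max_value, min_value, n_ticks):
    """Map a pixel y to an index value; the rect bottom is one tick below min_value."""
    step = (max_value - min_value) / (n_ticks - 1)
    return max_value - (y - PLOT_TOP) * (max_value - (min_value - step)) / PLOT_HEIGHT


def to_series(start_day, ys, max_value, min_value, n_ticks):
    """One value per consecutive day, starting at start_day."""
    return [{'day': start_day + datetime.timedelta(days=i),
             'value': pixel_to_value(y, max_value, min_value, n_ticks)}
            for i, y in enumerate(ys)]


series = to_series(datetime.date(2016, 8, 1), [130.0, 233.83333333333334, 337.6666666666667],
                   1200.0, 300.0, 4)
for row in series:
    print(row['day'], row['value'])
```

With this sample scale the bottom edge maps to 0 (one step of 300 below the 300 label), so the three sample pixels give roughly 1200, 600 and 0.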

Source code analysis

The crawler class (BD_Spider/bd_spider.py), which drives Chrome through WebDriver:

```python
import datetime
import os
import re
import time
import urllib.parse
from time import sleep

import requests
from selenium import webdriver
from selenium.common.exceptions import NoSuchElementException, TimeoutException
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.ui import WebDriverWait

from utils.reg_ocr import getverify

# Plot area taken from the chart's <rect>: top at y = 130, height 207.666... px
PLOT_TOP = 130.0
PLOT_HEIGHT = 207.66666666666666
SUBMIT_XPATH = '//*[@class="pass-form-item pass-form-item-submit"]'


class BD_Crawler(object):

    def __init__(self, userName, pwd, chromedriver, data_dir):
        '''
        Initialize the driver.
        :param userName: account name
        :param pwd: password
        :param chromedriver: path to the chromedriver executable
        :param data_dir: Chrome user-data directory
        '''
        options = webdriver.ChromeOptions()
        options.add_argument('--user-data-dir=' + data_dir)  # use your own profile directory
        # options.add_argument('--user-agent=iphone')  # change the User-Agent to get the mobile (m.) site
        # options.add_extension(r'd:\crx\AdBlock_v2.17.crx')  # path to a downloaded .crx extension
        self.driver = webdriver.Chrome(service=Service(chromedriver), options=options)
        self.userName = str(userName)
        self.pwd = pwd

    def login(self):
        '''
        Log in if needed.
        :return: True if logged in (or already logged in), False on error
        '''
        try:
            # Already logged in: the index page shows its compInfo blocks
            if self.driver.find_elements(By.CLASS_NAME, 'compInfo'):
                return True
            WebDriverWait(self.driver, 30).until(
                EC.presence_of_element_located((By.NAME, 'userName'))
            )
            self.driver.find_element(By.NAME, 'userName').send_keys(self.userName)
            self.driver.find_element(By.NAME, 'password').send_keys(self.pwd)
            self.driver.find_element(By.XPATH, SUBMIT_XPATH).submit()
            print('Check whether a captcha is shown; if so, enter it manually')
            sleep(10)
            success_xpath = ('//*[@class="pass-success pass-success-verifyCode" and '
                             '@style="display: block; visibility: visible; opacity: 1;"]')
            # While the captcha box exists, poll (at most ~60 s) until it is marked correct, then resubmit
            for _ in range(60):
                if not self.driver.find_elements(By.XPATH, '//*[@class="pass-verifyCode"]'):
                    break
                if self.driver.find_elements(By.XPATH, success_xpath):
                    self.driver.find_element(By.XPATH, SUBMIT_XPATH).submit()
                    break
                sleep(1)
            sleep(6)
            return True
        except Exception:
            return False

    def getTimeSpan(self):
        '''
        Read the displayed date range.
        :return: (first day, number of days to the last day), or (None, -1) on failure
        '''
        try:
            self.driver.implicitly_wait(20)  # seconds
            # The 5th compInfo block holds the date range; its first and last tokens are the dates
            time_span = self.driver.find_elements(By.CLASS_NAME, 'compInfo')[4].text
            tokens = time_span.split()
            from_daytime = datetime.datetime.strptime(tokens[0], '%Y-%m-%d').date()
            to_daytime = datetime.datetime.strptime(tokens[-1], '%Y-%m-%d').date()
            return from_daytime, (to_daytime - from_daytime).days
        except Exception:
            return None, -1
        finally:
            self.driver.implicitly_wait(5)  # seconds

    def downloadImageFile(self, keyword, imgUrl):
        '''Download the y-axis image with the browser's cookies and return the local path.'''
        photo_dir = '../photos'
        os.makedirs(photo_dir, exist_ok=True)
        local_filename = os.path.join(photo_dir, keyword + time.strftime('_%Y_%m_%d') + '.png')
        print('Download Image File =', local_filename)
        # stream=True downloads the body in chunks instead of all at once
        r = requests.get(imgUrl, cookies=self.getCookieJson(), stream=True)
        r.raise_for_status()
        with open(local_filename, 'wb') as f:
            for chunk in r.iter_content(chunk_size=1024):
                if chunk:  # filter out keep-alive chunks
                    f.write(chunk)
        return local_filename

    def getCookieJson(self):
        '''Convert the browser's cookies into a name -> value dict for requests.'''
        return {cookie['name']: cookie['value'] for cookie in self.driver.get_cookies()}

    def webcrawler(self, keyword):
        '''
        Baidu Index control function.
        :return: [{'day': date, 'value': float}, ...], or None on failure
        '''
        quoted = urllib.parse.quote(keyword, encoding='gb2312')  # the keyword goes into the URL as GB2312
        self.driver.get('http://index.baidu.com/?tpl=trend&word=' + quoted)
        # self.driver.maximize_window()
        self.login()
        try:
            # The AJAX-loaded content is ready once the element with id="trend" exists
            WebDriverWait(self.driver, 20).until(
                EC.presence_of_element_located((By.ID, 'trend'))
            )
        except TimeoutException:
            pass
        try:
            # Wait up to ~15 s for the loading overlay to be hidden
            for _ in range(3):
                style = self.driver.find_element(By.CLASS_NAME, 'toLoading').get_attribute('style')
                if style == 'display: none;':
                    break
                sleep(5)
        except NoSuchElementException:
            pass
        # Read the date range, refreshing up to 3 times if it is not available yet
        from_daytime, alldays = None, -1
        for _ in range(3):
            from_daytime, alldays = self.getTimeSpan()
            if alldays >= 0:
                break
            self.driver.refresh()
            sleep(2)
        if alldays < 0:
            return None
        # Get the y coordinates of all points from the trend line's <path>
        svg_data = None
        for _ in range(3):
            match = re.search(r'<path fill="none" stroke="#3ec7f5"(.*?)" stroke-width="2" stroke-opacity="1"',
                              self.driver.page_source)
            if match:
                svg_data = match.group(1)
                break
            self.driver.refresh()
            sleep(2)
        if svg_data is None:
            return None
        # The last number of the M segment and of each C segment is one point's y pixel
        points = [segment.split(',')[-1] for segment in svg_data.split('C')]
        # The number of points must equal the number of days in the range
        if len(points) != alldays + 1:
            return None
        # self.driver.save_screenshot('aa.png')  # screenshot of the current page
        # Locate the y-axis scale image and OCR its labels
        axis_url = self.driver.find_element(By.ID, 'trendYimg').get_attribute('src')
        pic_path = self.downloadImageFile(quoted, axis_url)
        ticks = getverify(pic_path).split()
        if len(ticks) < 2:
            return None
        max_value = float(ticks[0].replace(',', ''))
        min_value = float(ticks[-1].replace(',', ''))
        tick_step = (max_value - min_value) / (len(ticks) - 1)  # spacing between labels
        index_value = []
        for index, point in enumerate(points):
            day = from_daytime + datetime.timedelta(days=index)
            # Linear map: PLOT_TOP -> max_value, PLOT_TOP + PLOT_HEIGHT -> min_value - tick_step
            value = max_value - (float(point) - PLOT_TOP) * (max_value - (min_value - tick_step)) / PLOT_HEIGHT
            index_value.append({'day': day, 'value': value})
        return index_value


def test_ocr():
    pic_path = os.path.join('..', 'photos', 'love_2016_08_31.png')
    print(getverify(pic_path))


if __name__ == '__main__':
    test_ocr()
```
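`getTimeSpan` and the point-count check are the crawler's main safeguard. If the number of path points is not days + 1, the chart and the date range disagree, and the result is discarded. The date arithmetic can be tried without a browser.

This sketch assumes, as the code does, that the first and last whitespace-separated tokens of the range text are `YYYY-MM-DD` dates. The sample string and helper names are hypothetical.

```python
import datetime


def parse_time_span(text):
    """Return (first day, number of days between first and last day) from the range text."""
    tokens = text.split()
    first = datetime.datetime.strptime(tokens[0], '%Y-%m-%d').date()
    last = datetime.datetime.strptime(tokens[-1], '%Y-%m-%d').date()
    return first, (last - first).days


def points_match_days(points, alldays):
    """The chart has one point per day, counting both ends of the range."""
    return len(points) == alldays + 1


first, alldays = parse_time_span('2016-08-01 ~ 2016-08-30')
print(first, alldays)                         # 2016-08-01 29
print(points_match_days([0.0] * 30, alldays))  # True
```

A 30-day range like this one matches the 30 on-curve points in the path shown earlier.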

Image recognition (OCR) of the axis labels: define utils/reg_ocr.py

```python
from PIL import Image, ImageEnhance
from pytesseract import image_to_string


def getverify(name):
    '''
    Image recognition module.
    :param name: path to the image
    :return: recognized text with surrounding whitespace stripped
    '''
    # Open the image; convert to grayscale so the contrast step also works on palette PNGs
    with Image.open(name) as im:
        gray = im.convert('L')
    # Raising the contrast improves the recognition rate
    image_enhanced = ImageEnhance.Contrast(gray).enhance(4)
    # Upscale 2x so the digits are easier to recognize
    im_big = image_enhanced.resize((image_enhanced.size[0] * 2, image_enhanced.size[1] * 2), Image.BILINEAR)
    # Recognize
    text = image_to_string(im_big)
    im_big.close()
    return text.strip()


# Recognition: this program only handles images of digits
if __name__ == '__main__':
    # The image must be in the same directory as this file, or pass an absolute path
    print(getverify('trendYimg.png'))
```
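OCR is the weakest link. Only the first and last labels enter the formula, so a single misread digit silently distorts every value. A cheap sanity check is to confirm that all recognized labels are descending and evenly spaced before trusting them.

This is a hypothetical addition, not part of the original code.

```python
def ticks_look_valid(ocr_text, rel_tol=1e-6):
    """True if the OCR'd labels are strictly descending and evenly spaced."""
    try:
        values = [float(t.replace(',', '')) for t in ocr_text.split()]
    except ValueError:
        return False  # a token that is not a number, e.g. a misread character
    if len(values) < 2:
        return False
    steps = [a - b for a, b in zip(values, values[1:])]
    if any(s <= 0 for s in steps):
        return False
    return all(abs(s - steps[0]) <= rel_tol * steps[0] for s in steps)


print(ticks_look_valid('1,200 900 600 300'))  # True
print(ticks_look_valid('1,200 900 6O0 300'))  # False: letter O instead of zero
print(ticks_look_valid('1,200 960 600 300'))  # False: uneven spacing
```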

Main program

```python
import base64

import yaml

from BD_Spider.bd_spider import BD_Crawler


def main():
    # setting.yaml contains a single YAML document
    with open('../config/setting.yaml', 'r', encoding='utf-8') as stream:
        pyconfig = yaml.safe_load(stream)
    auth = pyconfig['Authentication']
    # The password is stored Base64-encoded; decode it back to str for send_keys
    bd_crawler = BD_Crawler(auth['name'], base64.b64decode(auth['pwd']).decode('utf-8'),
                            pyconfig['chromedriver'], pyconfig['data_dir'])
    try:
        values = bd_crawler.webcrawler('爱情')  # keyword "爱情" ("love")
        print(values)
    finally:
        bd_crawler.driver.quit()  # close the browser even if crawling fails
    print('end')


if __name__ == '__main__':
    main()
```

Configuration file setting.yaml (read from ../config/setting.yaml)

```yaml
Authentication:
  name: your_baidu_account   # Baidu account name
  pwd: XXXXXXXXXXX           # Base64-encoded password
chromedriver: C:\Program Files (x86)\Google\Chrome\Application\chromedriver.exe
data_dir: C:\Users\enlong\AppData\Local\Google\Chrome\User Data11
```
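`main` passes `pwd` through `base64.b64decode`, so the value stored in setting.yaml is the Base64 encoding of the password. Base64 only stops the password from being readable at a glance; it is not encryption.

Below is a sketch for producing and checking the value. The helper names and the password are dummies.

```python
import base64


def encode_pwd(password):
    """Value to put in setting.yaml's pwd field."""
    return base64.b64encode(password.encode('utf-8')).decode('ascii')


def decode_pwd(stored):
    """What main() recovers (decoded to str for send_keys)."""
    return base64.b64decode(stored).decode('utf-8')


stored = encode_pwd('my-secret')
print(stored)
print(decode_pwd(stored))  # my-secret
```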