Installing and using scrapy-redis

Documentation:

https://scrapy-redis.readthedocs.org.

Installing scrapy-redis

Scrapy was already installed earlier, so here we install scrapy-redis directly.

pip install scrapy-redis

Adapting the scrapy-redis example project

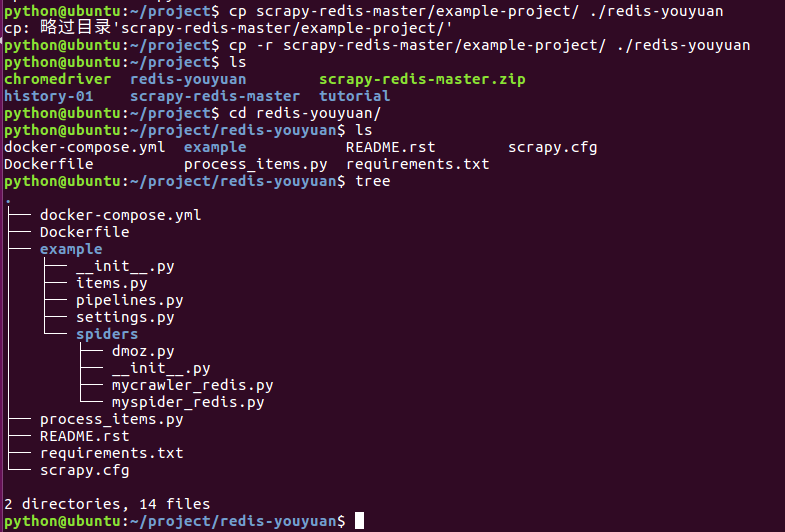

First get the scrapy-redis example from GitHub, then copy its example-project directory to the location where you want to work.

git clone https://github.com/rolando/scrapy-redis.git

cp -r scrapy-redis/example-project ./redis-youyuan

Alternatively, download the whole project as scrapy-redis-master.zip, unzip it, and then run:

cp -r scrapy-redis-master/example-project/ ./redis-youyuan

cd redis-youyuan/

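Use tree to view the project directory.

Modifying settings.py

The parts of the modified configuration file that relate to scrapy-redis are listed below; middleware, proxy, and similar settings are omitted here.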

https://scrapy-redis.readthedocs.io/en/stable/readme.html

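Note: Chinese comments in settings.py cause an error when the file is loaded (under Python 2 this happens when the file has no encoding declaration), so write the comments in English.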

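    # Use the scrapy-redis Scheduler
    SCHEDULER = "scrapy_redis.scheduler.Scheduler"

    # Keep the queues used by scrapy-redis in redis, so that a crawl can be
    # paused and resumed later
    SCHEDULER_PERSIST = True

    # Queue class used to order the URLs to crawl; the default orders by priority
    SCHEDULER_QUEUE_CLASS = 'scrapy_redis.queue.SpiderPriorityQueue'

    # Alternative: first in, first out (FIFO) ordering
    # SCHEDULER_QUEUE_CLASS = 'scrapy_redis.queue.SpiderQueue'

    # Alternative: last in, first out (LIFO) ordering
    # SCHEDULER_QUEUE_CLASS = 'scrapy_redis.queue.SpiderStack'

    # Only takes effect when the queue class is SpiderQueue or SpiderStack:
    # maximum idle time (in seconds) before the spider is closed
    SCHEDULER_IDLE_BEFORE_CLOSE = 10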
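To make the three queue choices concrete, here is a minimal sketch in plain Python, without redis, of the order in which each kind of queue hands requests back. The `pop_order` function, its mode labels, and the sample requests are hypothetical and exist only for illustration; scrapy-redis itself keeps these queues in redis, and how it breaks ties between equal priorities may differ from this sketch.

```python
import heapq


def pop_order(requests, mode):
    """Return request names in the order a queue of the given mode yields them.

    requests: list of (name, priority) tuples in the order they were pushed.
    mode: "priority", "fifo" or "lifo" (hypothetical labels for this sketch).
    """
    if mode == "fifo":
        # First in, first out: same order as pushed.
        return [name for name, _ in requests]
    if mode == "lifo":
        # Last in, first out: reverse of push order.
        return [name for name, _ in reversed(requests)]
    if mode == "priority":
        # Higher priority comes out first; in this sketch ties keep push order.
        heap = [(-priority, index, name)
                for index, (name, priority) in enumerate(requests)]
        heapq.heapify(heap)
        return [heapq.heappop(heap)[2] for _ in range(len(heap))]
    raise ValueError("unknown mode: %s" % mode)


pushed = [("list-p1", 0), ("profile-a", 10), ("list-p2", 0)]
print(pop_order(pushed, "priority"))
print(pop_order(pushed, "fifo"))
print(pop_order(pushed, "lifo"))
```

With the priority queue, a request that was given a higher priority jumps ahead of list pages that were pushed earlier; the FIFO and LIFO variants ignore priority and behave like a breadth-first and a depth-first crawl respectively.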

    # Use RedisPipeline to store items in redis
    ITEM_PIPELINES = {
        'example.pipelines.ExamplePipeline': 300,
        'scrapy_redis.pipelines.RedisPipeline': 400,
    }
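Scrapy runs item pipelines in ascending order of the numbers in ITEM_PIPELINES, so ExamplePipeline (300) sees each item before RedisPipeline (400) does. A minimal sketch of that ordering rule, using a hypothetical helper named `pipeline_order`:

```python
def pipeline_order(item_pipelines):
    """Return pipeline paths sorted by their order value (lowest runs first)."""
    return [path for path, _ in sorted(item_pipelines.items(),
                                       key=lambda pair: pair[1])]


ITEM_PIPELINES = {
    'scrapy_redis.pipelines.RedisPipeline': 400,
    'example.pipelines.ExamplePipeline': 300,
}

for path in pipeline_order(ITEM_PIPELINES):
    print(path)
```

This is why the crawled and spider fields set by ExamplePipeline are already filled in by the time the item is written to redis.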

    # Redis connection parameters
    # REDIS_PASS is a redis connection password that I added myself; using it
    # requires a small change to the scrapy-redis source code so that it can
    # connect to redis with a password
    REDIS_HOST = '127.0.0.1'
    REDIS_PORT = 6379

    # Custom redis client parameters (i.e.: socket timeout, etc.)
    REDIS_PARAMS = {}
    #REDIS_URL = 'redis://user:pass@hostname:9001'
    #REDIS_PARAMS['password'] = 'itcast.cn'
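If the redis server requires a password, one option is to put it in a connection URL like the commented-out REDIS_URL. The sketch below builds such a URL. `build_redis_url` is a hypothetical helper, the `redis://:password@host:port/db` layout follows the URL scheme used by redis-py, and whether percent-escapes in the password are decoded depends on the redis-py version, so treat that part as an assumption to verify.

```python
try:
    from urllib.parse import quote  # Python 3
except ImportError:
    from urllib import quote  # Python 2


def build_redis_url(host, port=6379, password=None, db=0):
    """Build a redis:// URL, percent-encoding the password if one is given."""
    auth = ""
    if password:
        # Encode characters such as '@' or '/' that would break the URL.
        auth = ":%s@" % quote(password, safe="")
    return "redis://%s%s:%d/%d" % (auth, host, port, db)


print(build_redis_url("127.0.0.1"))
print(build_redis_url("127.0.0.1", password="p@ss/word"))
```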

    LOG_LEVEL = 'DEBUG'

    # The class used to detect and filter duplicate requests.
    # The default (RFPDupeFilter) filters based on request fingerprint using the
    # scrapy.utils.request.request_fingerprint function. In order to change the
    # way duplicates are checked you could subclass RFPDupeFilter and override
    # its request_fingerprint method. This method should accept scrapy Request
    # object and return its fingerprint (a string).
    DUPEFILTER_CLASS = 'scrapy.dupefilters.RFPDupeFilter'

    # By default, RFPDupeFilter only logs the first duplicate request. Setting
    # DUPEFILTER_DEBUG to True will make it log all duplicate requests.
    DUPEFILTER_DEBUG = True
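The idea behind fingerprint-based duplicate filtering can be sketched in a few lines. This is a simplified illustration, not Scrapy's actual request_fingerprint implementation: the `fingerprint` function and the `SeenFilter` class are hypothetical, and they hash only the method, URL, and body.

```python
import hashlib


def fingerprint(method, url, body=b""):
    """Hash the parts of a request that identify it (simplified)."""
    digest = hashlib.sha1()
    digest.update(method.upper().encode("utf-8"))
    digest.update(url.encode("utf-8"))
    digest.update(body)
    return digest.hexdigest()


class SeenFilter(object):
    """Remember fingerprints in a set and report repeats."""

    def __init__(self):
        self.seen = set()

    def request_seen(self, method, url, body=b""):
        fp = fingerprint(method, url, body)
        if fp in self.seen:
            return True
        self.seen.add(fp)
        return False


dupefilter = SeenFilter()
print(dupefilter.request_seen("GET", "http://www.youyuan.com/find/beijing/"))
print(dupefilter.request_seen("get", "http://www.youyuan.com/find/beijing/"))
```

The first call reports the request as new and the second reports it as a duplicate. The dupefilter shipped with scrapy-redis applies the same idea but keeps the set of fingerprints in redis, so that all crawler processes share it.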

    # Override the default request headers:
    DEFAULT_REQUEST_HEADERS = {
        'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8',
        'Accept-Language': 'zh-CN,zh;q=0.8',
        'Connection': 'keep-alive',
        'Accept-Encoding': 'gzip, deflate, sdch',
    }

Looking at pipelines.py

    from datetime import datetime


    class ExamplePipeline(object):
        def process_item(self, item, spider):
            # Record when the item was crawled (UTC) and which spider produced it
            item["crawled"] = datetime.utcnow()
            item["spider"] = spider.name
            return item
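To see what this pipeline does to an item without starting a crawl, it can be called by hand. In this sketch a plain dict stands in for the item, and `FakeSpider` is a hypothetical stand-in for a real spider object; the pipeline only reads its name attribute.

```python
from datetime import datetime


class ExamplePipeline(object):
    def process_item(self, item, spider):
        item["crawled"] = datetime.utcnow()
        item["spider"] = spider.name
        return item


class FakeSpider(object):
    """Hypothetical stand-in: the pipeline only reads spider.name."""
    name = "youyuan"


item = ExamplePipeline().process_item({"username": "demo"}, FakeSpider())
print(item["spider"])
print(isinstance(item["crawled"], datetime))
```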

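Project example

The example crawls youyuan.com profiles of women aged 18-25 in Beijing.

Modifying items.py

Add the Profile item that we ultimately want to save.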

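    from scrapy.item import Item, Field


    class Profile(Item):
        # Avatar image URL
        header_url = Field()
        # Album picture URLs
        pic_urls = Field()
        username = Field()
        # Personal monologue
        monologue = Field()
        age = Field()
        # Source site name: youyuan
        source = Field()
        source_url = Field()
        # Filled in by ExamplePipeline
        crawled = Field()
        spider = Field()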

Modifying the spider file

Add a youyuan.py file in the spiders directory and write the spider there; after that the spider can be run.

Here is a simple version:

    # -*- coding: utf-8 -*-
    import re

    from scrapy.linkextractors import LinkExtractor
    from scrapy.spiders import CrawlSpider, Rule

    from example.items import Profile


    class YouyuanSpider(CrawlSpider):
        name = 'youyuan'
        allowed_domains = ['youyuan.com']

        # List page on youyuan.com
        start_urls = ['http://www.youyuan.com/find/beijing/mm18-25/advance-0-0-0-0-0-0-0/p1/']

        # An age string is accepted only if it consists entirely of digits
        pattern = re.compile(r'^[0-9]+$')

        # Extract links to list pages and profile pages; the resulting requests
        # are stored in redis to wait for scheduling
        profile_page_lx = LinkExtractor(allow=(r'http://www\.youyuan\.com/\d+-profile/',))
        page_lx = LinkExtractor(allow=(r'http://www\.youyuan\.com/find/beijing/mm18-25/advance-0-0-0-0-0-0-0/p\d+/',))

        rules = (
            Rule(page_lx, callback='parse_list_page', follow=True),
            Rule(profile_page_lx, callback='parse_profile_page', follow=False),
        )

        # Handle list pages. Not strictly needed; kept as a convenient hook for debugging
        def parse_list_page(self, response):
            print("Processed list %s" % (response.url,))
            # print(response.body)
            self.profile_page_lx.extract_links(response)

        # Handle profile pages and build the Profile item we want
        def parse_profile_page(self, response):
            print("Processing profile %s" % response.url)
            profile = Profile()
            profile['header_url'] = self.get_header_url(response)
            profile['username'] = self.get_username(response)
            profile['monologue'] = self.get_monologue(response)
            profile['pic_urls'] = self.get_pic_urls(response)
            profile['age'] = self.get_age(response)
            profile['source'] = 'youyuan'
            profile['source_url'] = response.url
            # print("Processed profile %s" % response.url)
            yield profile

        # Extract the avatar image URL
        def get_header_url(self, response):
            header = response.xpath('//dl[@class="personal_cen"]/dt/img/@src').extract()
            if len(header) > 0:
                header_url = header[0]
            else:
                header_url = ""
            return header_url.strip()

        # Extract the username
        def get_username(self, response):
            usernames = response.xpath('//dl[@class="personal_cen"]/dd/div/strong/text()').extract()
            if len(usernames) > 0:
                username = usernames[0]
            else:
                username = ""
            return username.strip()

        # Extract the personal monologue
        def get_monologue(self, response):
            monologues = response.xpath('//ul[@class="requre"]/li/p/text()').extract()
            if len(monologues) > 0:
                monologue = monologues[0]
            else:
                monologue = ""
            return monologue.strip()

        # Extract the album picture URLs, joined with '|'
        def get_pic_urls(self, response):
            data_url_full = response.xpath('//li[@class="smallPhoto"]/@data_url_full').extract()
            # As in the original logic, fewer than two pictures yields an empty string
            if len(data_url_full) <= 1:
                return ""
            return '|'.join(data_url_full)

        # Extract the age
        def get_age(self, response):
            age_urls = response.xpath('//dl[@class="personal_cen"]/dd/p[@class="local"]/text()').extract()
            if len(age_urls) > 0:
                age = age_urls[0]
            else:
                age = ""
            age_words = re.split(' ', age)
            if len(age_words) <= 2:
                return "0"
            # The third word looks like "20岁"; drop the trailing character
            age = age_words[2][:-1]
            if self.pattern.match(age):
                return age
            return "0"
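The age extraction at the end of the spider is easy to test in isolation. The sketch below repeats the same steps as a standalone function. `parse_age` and the sample strings are hypothetical, and the function assumes, as the spider does, that the text consists of space-separated words with the age as the third word followed by the one-character suffix 岁.

```python
import re

DIGITS = re.compile(r'^[0-9]+$')


def parse_age(text):
    """Return the age as a digit string, or "0" if it cannot be found."""
    words = text.split(' ')
    if len(words) <= 2:
        return "0"
    # Drop the trailing one-character suffix, e.g. u"20岁" -> u"20".
    age = words[2][:-1]
    if DIGITS.match(age):
        return age
    return "0"


print(parse_age(u"北京 朝阳区 20岁"))
print(parse_age(u"北京 20岁"))
```

Checking that the whole string is digits, rather than only its first character, keeps values such as "2x" from being accepted as an age.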